Welcome to deploying your PyTorch model on Algorithmia!

This guide is designed as an introduction to deploying a PyTorch model and publishing an algorithm even if you’ve never used Algorithmia before.

Note: this guide uses the web UI to create and deploy your algorithm. If you prefer a code-only approach to deployment, review Algorithm Management after reading this guide.

Prerequisites

Before you deploy your PyTorch model on Algorithmia, there are a few things to do first:

Save your Pre-Trained Model

Train and save your model on your local machine, or on the platform you use for training, before you deploy it to production on the Algorithmia platform.
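The example algorithm later in this guide loads its model with torch.jit.load, which expects a TorchScript file. As a minimal sketch of how such a file could be produced, the snippet below traces a small network and saves it. TinyNet and save_torchscript are hypothetical names used only for illustration; substitute your own trained model and input shape.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for your trained CIFAR-10 classifier."""

    def __init__(self):
        super().__init__()
        self.features = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.classifier = nn.Linear(8 * 32 * 32, 10)

    def forward(self, x):
        x = torch.relu(self.features(x))
        return self.classifier(x.flatten(1))


def save_torchscript(model, path):
    """Trace the model with a dummy 32x32 RGB batch and write it to disk."""
    model.eval()
    example = torch.rand(1, 3, 32, 32)  # assumed input shape: N x C x H x W
    traced = torch.jit.trace(model, example)
    traced.save(path)
    return path
```

Calling save_torchscript(TinyNet(), "demo_model.t7") would write a file that torch.jit.load can read back. Tracing records one pass through the model, so a model with data-dependent control flow may need torch.jit.script instead.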

Create a Data Collection

Host your data where you want and serve it to your model with Algorithmia’s Data API.

In this guide we’ll use Algorithmia’s Hosted Data Collection, but you can host it in S3 or Dropbox as well. Alternatively, if your data lies in a database, check out how we connected to a DynamoDB database.

First, you’ll want to create a data collection to host your pre-trained model.

-

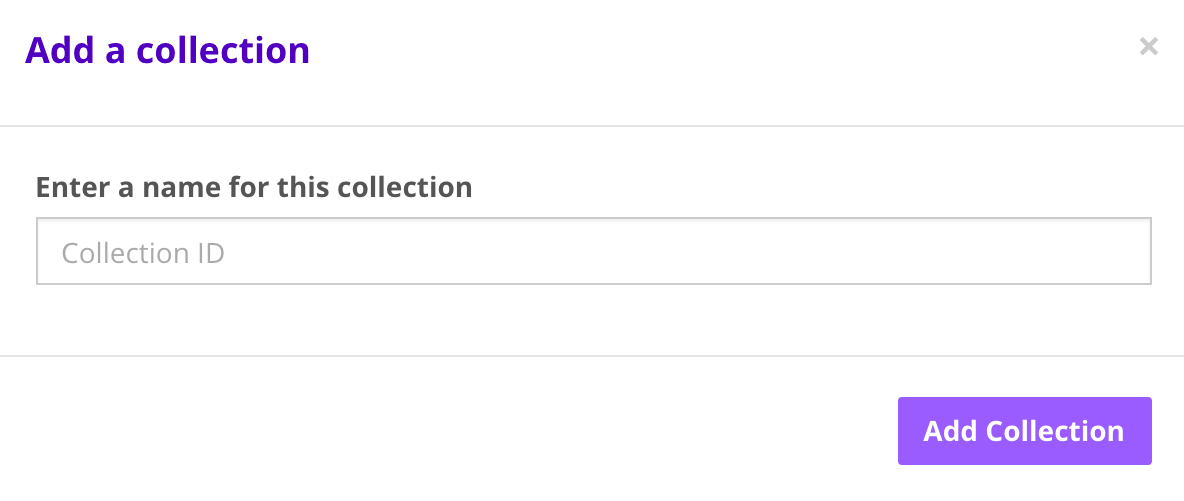

Log into your Algorithmia account and create a data collection via the Data Collections page.

-

Click on “Add Collection” under the “My Collections” section.

-

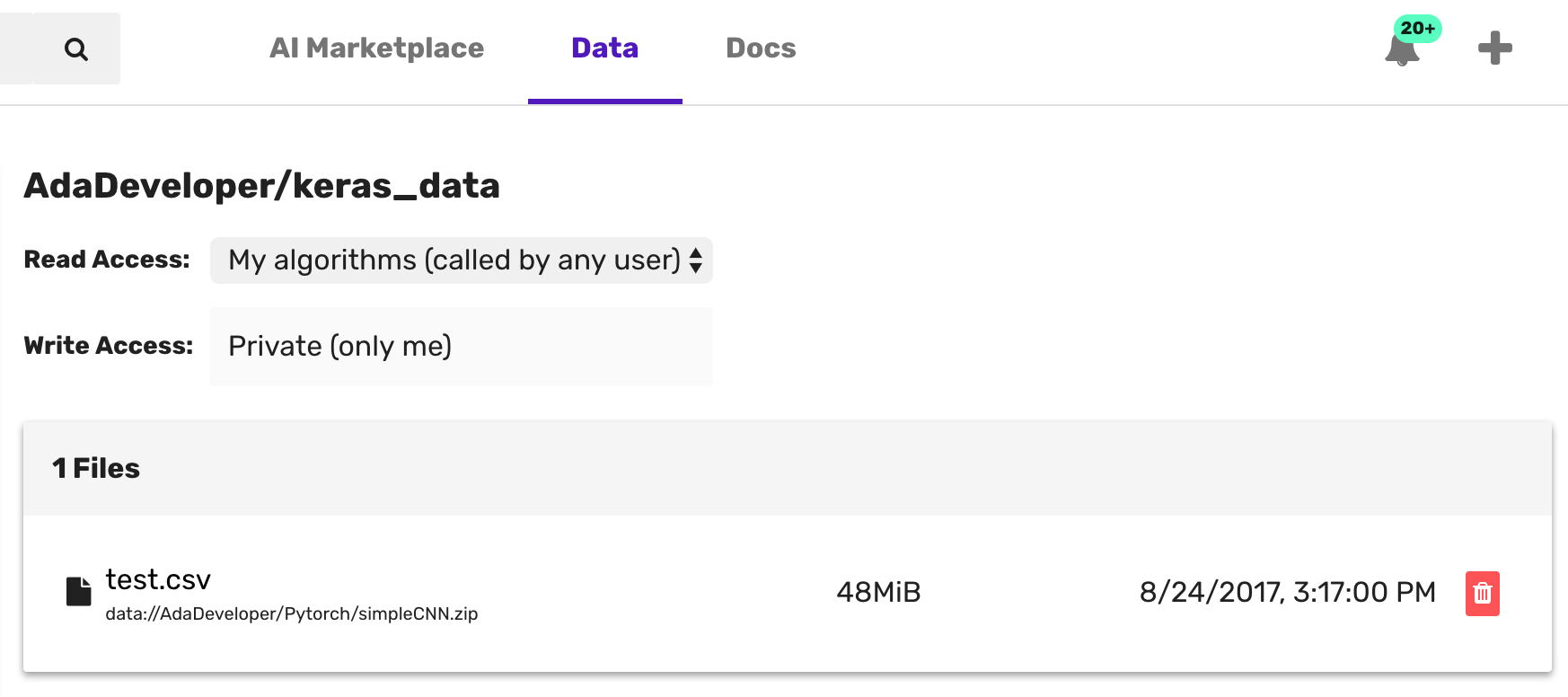

After you create your collection you can set the read and write access on your data collection.

For more information check out: Data Collection Types.

Note that you can also use the Data API to create data collections and upload files.
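As a rough sketch of the Data API route, the helper below creates a collection if it does not exist and uploads a model file to it using the Algorithmia Python client. The function name upload_model, the placeholder API key, and the placeholder paths are assumptions for illustration only.

```python
def upload_model(client, collection_uri, local_path, file_name):
    """Create the collection if needed, upload the file, and return its data URI."""
    collection = client.dir(collection_uri)
    if not collection.exists():
        collection.create()
    remote_uri = collection_uri.rstrip("/") + "/" + file_name
    client.file(remote_uri).putFile(local_path)
    return remote_uri


def main():
    # Imported here so the helper above can be read without the package installed.
    import Algorithmia

    client = Algorithmia.client("YOUR_API_KEY")  # placeholder key
    uri = upload_model(
        client,
        "data://YOUR_USERNAME/YOUR_DATACOLLECTION",
        "demo_model.t7",  # local file to upload
        "demo_model.t7",  # name inside the collection
    )
    print(uri)
```

The returned URI is the path you would later pass to client.file() inside your algorithm.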

Host Your Model File

Next, upload your pickled model to your newly created data collection.

- Upload your model by clicking the “Drop files here to upload” box.

- Note the path to your files: data://username/collections_name/file_name.zip

Create your Algorithm

Hopefully you’ve already followed along with the Getting Started Guide for algorithm development. If not, you might want to check it out in order to understand the various permission types, how to enable a GPU environment, and how to use the CLI.

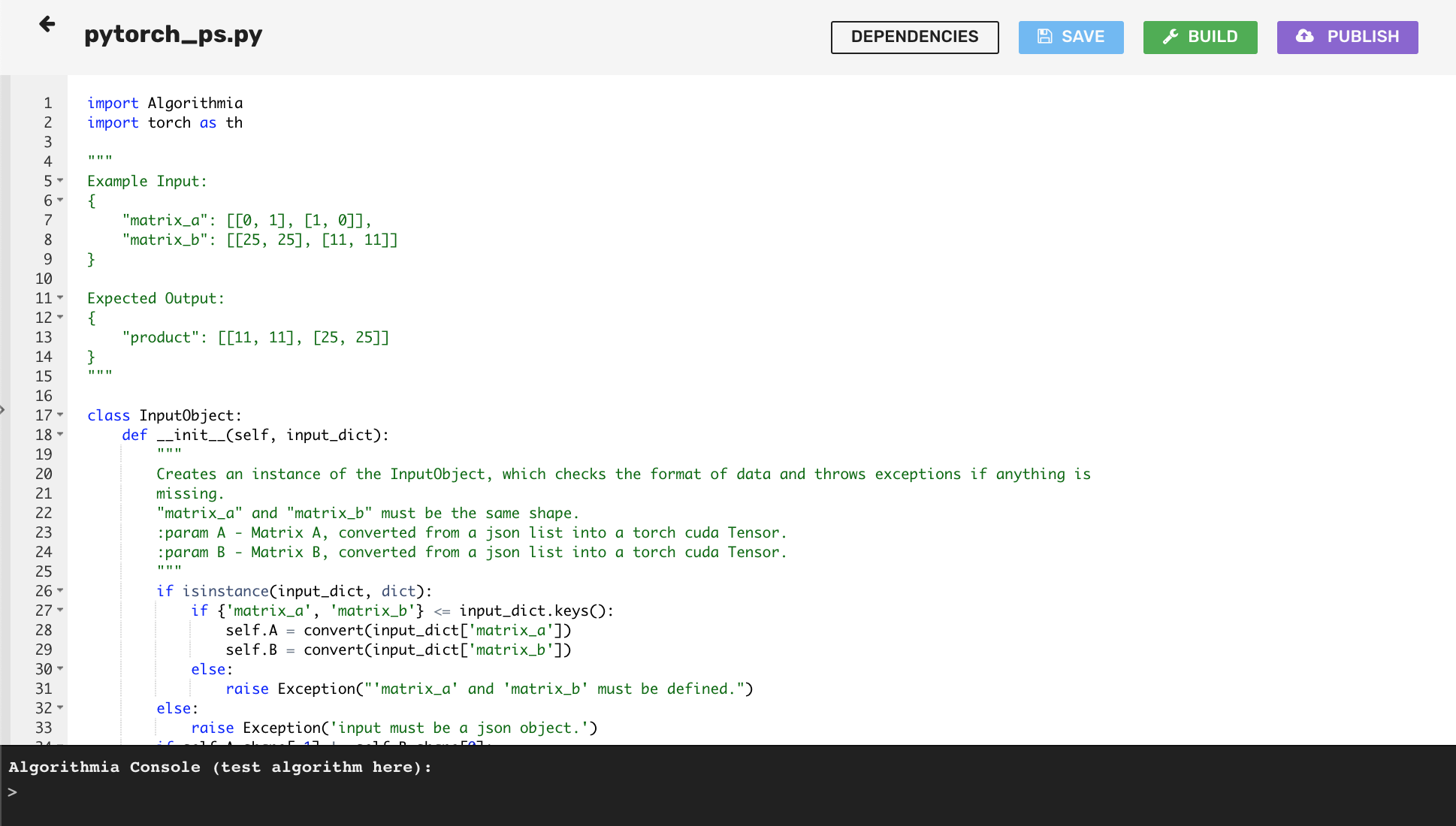

Once you’ve gone through the Getting Started Guide and created your algorithm, you’ll notice boilerplate code in the editor. If you chose the Python 3.X beta PyTorch version when you created your algorithm, the boilerplate code is more verbose. Otherwise it is a simple “Hello World” algorithm.

The main thing to note about the algorithm is that it’s wrapped in the apply() function.

The apply() function defines the entry point of the algorithm. Every algorithm uses apply() so that algorithms are standardized. This makes them easy to chain and helps authors design algorithms that are predictable and easy for end users to leverage.
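To make the idea concrete, here is a minimal sketch of an apply() entry point. It is not the boilerplate the editor generates; the input handling and the "greeting" key are assumptions chosen for illustration.

```python
def apply(input):
    """Entry point: receives the parsed request body and returns a JSON-serializable value."""
    if isinstance(input, dict):
        name = input.get("name", "world")
    else:
        name = str(input)
    return {"greeting": "Hello " + name}
```

Because every algorithm exposes the same single function, the output of one algorithm can be passed directly as the input of another.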

Go ahead and remove the boilerplate code shown below that’s inside the apply() function at the bottom of the page, but leave the apply() function itself intact:

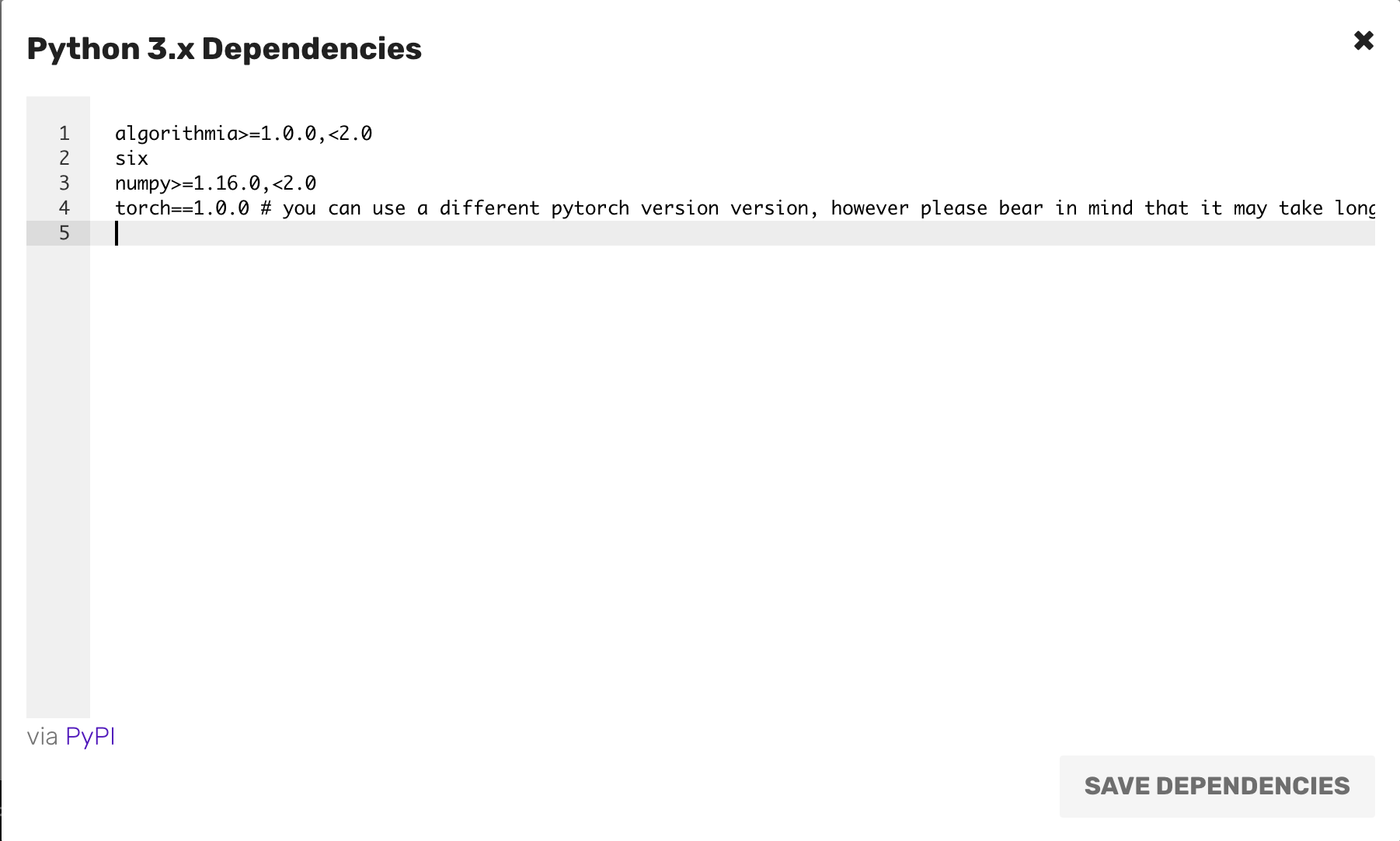

Set your Dependencies

Now set the dependencies that your model relies on.

- Click the “Dependencies” button at the top right of the UI, list your packages below the required ones that are already listed, and click “Save Dependencies” in the bottom right corner.
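One detail that is easy to miss is that the name you import is not always the name of the package you list. The sketch below maps the imports used by the example algorithm in this guide to their PyPI package names, assuming the dependency list accepts pip-style package names. The helper dependency_lines is hypothetical, and no version pins are shown because the right versions depend on your environment.

```python
# Import name used in the code -> package name to list as a dependency.
IMPORT_TO_PACKAGE = {
    "Algorithmia": "algorithmia",
    "PIL": "pillow",
    "torch": "torch",
    "torchvision": "torchvision",
}


def dependency_lines(import_names):
    """Return the sorted, de-duplicated package names for the given imports."""
    return sorted({IMPORT_TO_PACKAGE.get(name, name) for name in import_names})


for line in dependency_lines(["Algorithmia", "PIL", "torch", "torchvision"]):
    print(line)
```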

Load your Model

Here is where you load and run your model, which will be called by the apply() function.

We recommend preloading your model in a separate function outside of the apply() function.

Loading a model can take time, depending on the file size, so it should happen only once, when the algorithm first starts.

All subsequent calls then invoke only the apply() function, which is much faster because your model is already loaded.
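The load-once pattern can be sketched without any model at all. In the illustration below, load_model is a stand-in that simulates a slow load, and LOAD_COUNT exists only to show that the load runs a single time regardless of how many requests arrive.

```python
import time

LOAD_COUNT = 0  # counts how often the expensive load runs (illustration only)


def load_model():
    """Stand-in for downloading and deserializing a model file."""
    global LOAD_COUNT
    LOAD_COUNT += 1
    time.sleep(0.1)  # simulate a slow load
    return lambda x: x * 2  # hypothetical "model"


# Runs once, when the module is first loaded.
model = load_model()


def apply(input):
    # Every request reuses the already-loaded model.
    return model(input)
```

If load_model() were called inside apply() instead, every request would pay the loading cost again.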

If you are authoring an algorithm, avoid using the ‘.my’ pseudonym in the source code. When the algorithm is executed, ‘.my’ will be interpreted as the user name of the user who called the algorithm, rather than the author’s user name.

Note that you always want to create valid JSON input and output in your algorithm. For examples see the Algorithm Development Guides.
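As a hedged sketch of what valid JSON input and output could look like for an image classifier, the helpers below accept either a bare data URI or an object with an "image" key, and return a plain dictionary. The names parse_input and format_output and the key names are assumptions, not part of the Algorithmia API.

```python
import json


def parse_input(input):
    """Accept either a bare data URI string or {"image": "<data URI>"}."""
    if isinstance(input, str):
        return input
    if isinstance(input, dict) and isinstance(input.get("image"), str):
        return input["image"]
    raise ValueError('Expected a data URI string or {"image": "<data URI>"}')


def format_output(image_uri, label):
    """Build a result that json.dumps can serialize."""
    result = {"image": image_uri, "predicted_class": label}
    json.dumps(result)  # raises TypeError if a value is not serializable
    return result
```

Values such as PyTorch tensors are not JSON-serializable, so convert them to plain Python types (for example with .item() or .tolist()) before returning them.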

Example Hosted Model (Main):

```python
"""
An example of how to load a trained model and use it
to predict labels for images in the CIFAR-10 dataset.
"""

import Algorithmia
from PIL import Image
import torch
import torchvision
import torchvision.transforms as transforms

client = Algorithmia.client()

classes = ('plane', 'car', 'bird', 'cat',
           'deer', 'dog', 'frog', 'horse', 'ship', 'truck')


def preprocessing(image_path):
    # Download the image from the Data API to a local temporary file
    image_file = client.file(image_path).getFile().name

    # Normalize and resize image
    normalize = transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
    preprocess = transforms.Compose(
        [transforms.Resize((32, 32)),
         transforms.ToTensor(), normalize])
    # Convert to RGB so grayscale or RGBA images match the 3-channel normalization
    img = Image.open(image_file).convert("RGB")
    output = preprocess(img)
    return output


def load_model():
    file_path = "data://YOUR_USERNAME/YOUR_DATACOLLECTION/demo_model.t7"
    model_file = client.file(file_path).getFile().name
    return torch.jit.load(model_file).cuda()


# Load the model once, outside of apply(), so later calls can reuse it
model = load_model()


def predict(image):
    # Add a batch dimension and move the tensor to the GPU
    image_tensor = image.unsqueeze_(0).float().cuda()
    # Predict the class of the image
    with torch.no_grad():
        outputs = model(image_tensor)
    _, predicted = torch.max(outputs, 1)
    return predicted


# API calls will begin at the apply() method, with the request body passed as 'input'
# For more details, see https://algorithmiaio.github.io/algorithm-development/languages
def apply(input):
    # Example input: data://YOUR_USERNAME/YOUR_DATACOLLECTION/sample_animal1.jpg
    processed_data = preprocessing(input)
    prediction = predict(processed_data)
    predictions = [classes[prediction[j]] for j in range(1)]
    return "Predicted class is: {0}".format(predictions)
```

The algorithm returns a string containing the predicted class of the image, such as “plane”.

If you run into any problems or need help, don’t hesitate to reach out to our team!

Publish your Algorithm

The last step is publishing your algorithm. The best part of deploying your model on Algorithmia is that users can access it via an API that takes only a few lines of code to use! Here is what you can set when publishing your algorithm:

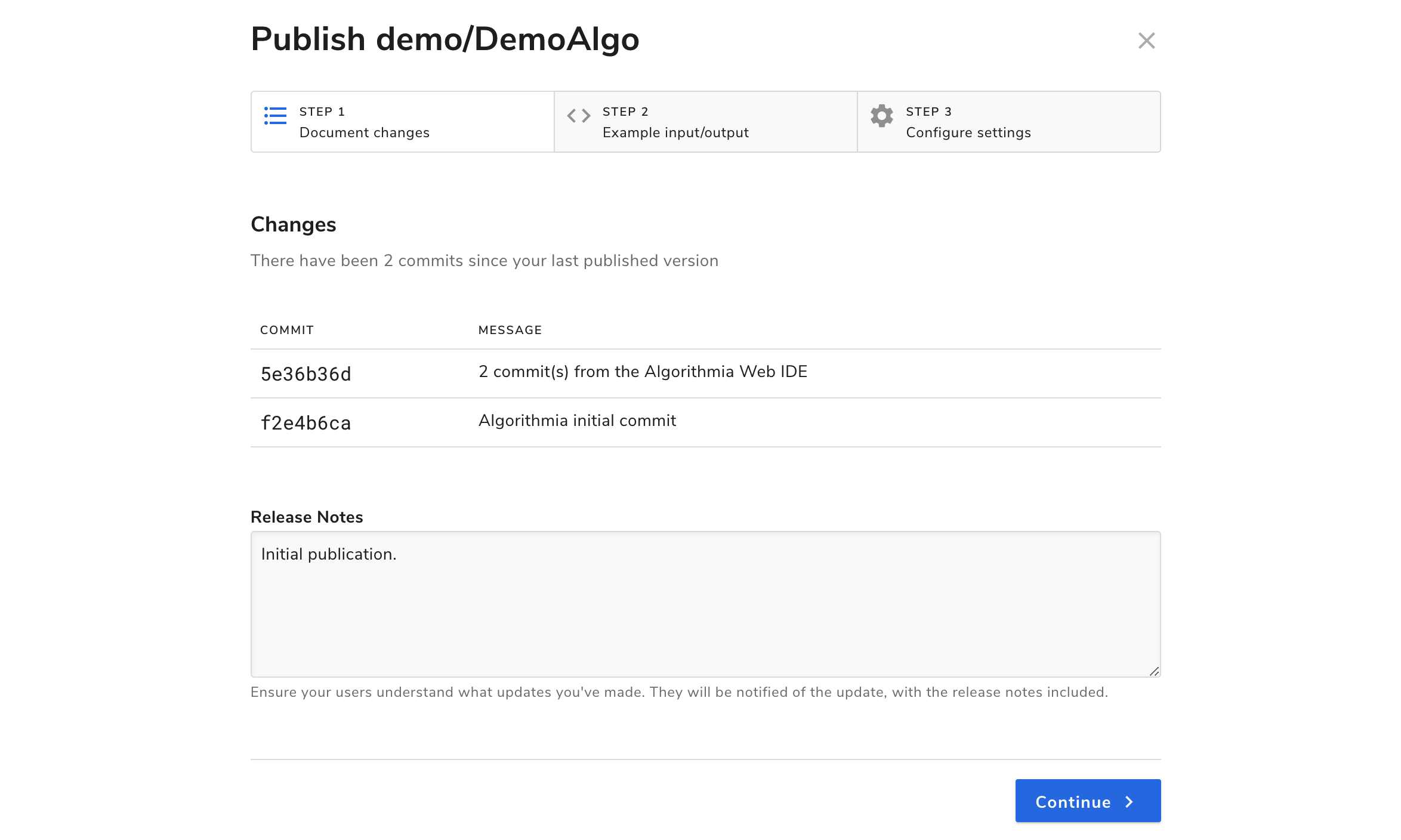

On the upper right-hand side of the algorithm page, you’ll see a purple “Publish” button, which brings up a modal.

In this modal, you’ll see a Changes tab, a Sample I/O tab, and one called Versioning.

If you don’t recall how to publish an algorithm, review the Getting Started Guide before you finish publishing.
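Once the algorithm is published, calling it from the Algorithmia Python client could look roughly like the sketch below. The helper name classify_image, the API key, the algorithm path, and the version number 0.1.0 are placeholders, not values taken from this guide.

```python
def classify_image(client, algo_path, image_uri):
    """Send one image URI to the algorithm and return its result."""
    return client.algo(algo_path).pipe(image_uri).result


def main():
    # Imported here so the helper above can be read without the package installed.
    import Algorithmia

    client = Algorithmia.client("YOUR_API_KEY")  # placeholder key
    result = classify_image(
        client,
        "YOUR_USERNAME/YOUR_ALGORITHM/0.1.0",  # placeholder path and version
        "data://YOUR_USERNAME/YOUR_DATACOLLECTION/sample_animal1.jpg",
    )
    print(result)
```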

Working Demo

If you would like to see a working demo on the platform, you can find it here: PyTorchJitGPU

That’s it for hosting your PyTorch model on Algorithmia!
